Are large-scale Node.js systems possible? Empirically, the answer is yes. Walmart and PayPal have both shown that it can be done. The quick criticism is that you need 10X engineers. This is a classic and well-founded criticism. New ways of doing things are often found to be exceptionally productive, precisely because brilliant minds self-select for the new and interesting.

So let’s rephrase the question. Are large-scale Node.js systems possible with mainstream developers? If you believe that these large-scale Node.js systems will resemble the large-scale Java and .Net systems you have known and loved, then the answer is, emphatically, no. JavaScript is far too weak a language to support the complexity inherent in systems of such scale. It’s not exactly type-safe, and half the language is unusable. There’s a reason the best-selling book on the language is called JavaScript: The Good Parts.

Despite this, we’ve managed to build quite a few large-scale systems at my company, nearForm. Here’s what we’ve learned, and how we do it.

The defining attribute of most large-scale, mainstream traditional systems is that they are monolithic. That is, a single large codebase, with many files, thousands of classes, and innumerable configuration files (in XML, if you’re lucky). Trying to build a system like this in JavaScript is indeed the path to madness. The visceral rejection of Node.js that you see from some quarters is often the spidey-sense of an experienced enterprise developer zapping them between the eyes. JavaScript? No! This reaction is entirely justified. Java and .Net have been designed to survive enterprise development. They enable monolithic architecture.

There are of course systems built in Java and .Net that are not monolithic, that are more structured. I’ve built in both styles myself. But it takes effort, and even the best systems fall to technical debt over time. It’s too easy to fall back into the monolithic trap.

Monolithic Systems are Bad

What is so bad about monolithic systems anyway? What does it mean for a system to be “monolithic”? The simplest definition is a system that cannot survive the loss of any of its parts. You pull one part out, and the whole thing fails. Each part is connected to the others, and interdependent on them.

The term monolith means single stone, and is derived from Ancient Greek. The ancient city of Petra in modern-day Jordan is one of the best examples of monolithic architecture. Its buildings are constructed in one piece, hewn directly from the cliff face of a rocky mountain. It also provides a perfect example of the failure mode of monolithic systems. In 363 AD an earthquake damaged many of the buildings, and the complex system of aqueducts. As these were carved directly into the mountain, they were impossible to repair, and the city fell into terminal decline.

So it goes with monolithic software. Technical debt, the complexity built into the system over time, makes the system impossible to repair or extend at reasonable cost as the environment changes. You end up with things like month-long code freezes in December so that the crucial Christmas shopping season is not affected by unknowable side-effects.

The other effect of monolithic software is more pernicious. It generates software development processes and methodologies. Because the system has so many dependencies, you must be very careful how you let developers change it. A lot of effort must be expended to prevent breakage. Our current approaches, from waterfall to agile, serve simply to enable monolithic systems. They enable us to build bigger and add more complexity. Even unit testing is an enabler. You thought unit testing was the good guy? It’s not. If you do it properly, it just lets you build bigger, not smarter.

Modular Systems are Good

So what are we supposed to do, as software developers, to avoid building monolithic systems? There are no prizes for knowing the answer. Build modular systems! The definition of a modular system is simply the inverse: each part stands alone, and the system is still useful when parts are missing.

Modular software should therefore be composed of components, each, by definition, reusable in many contexts. The idea of reusable software components is one of the Holy Grails of software development.

The greatest modular system humanity has created to date is the intermodal shipping container. This is a steel box that comes in a standard set of sizes, most commonly 8′ wide, 8′6″ tall, and 20 or 40 feet long. This standardisation enables huge efficiency in the transport of goods. Each container is re-usable and has a standardised “API”, so to speak.

Sadly, software components are nothing like this. Each must necessarily have its own API. There are dependency hierarchies. There are versioning issues. Nonetheless, we persist in trying to build modular systems, because we know it is the only real way to deal with complexity.

There have been some success stories, mostly at the infrastructure level. UNIX pipes, and the UNIX philosophy of small programs that communicate over pipes, work rather well in practice. But they only take you so far.

Other attempts, such as CORBA, or Microsoft’s OLE, have suffered under their own weight. We’ve grown rather fond of JSON-based REST services in recent years. Anyone who’s been at the mercy of third party APIs, and I’m looking at you, Facebook, will know that this is no promised-land either.

Objects are Hell

The one big idea for modular software that seems to have stuck to the wall, is the object-oriented paradigm.

Objects are supposed to be reusable representations of the world, both abstract and real. The tools of object-oriented development (interfaces, inheritance, polymorphism, dynamic methods, and so on) are supposed to provide us with the power to represent anything we need to build. These tools are supposed to enable us to build objects in a modular, reusable way.

The fundamental idea of objects is really quite broken when you think about it. The object approach is to break the world into discrete entities with well-defined properties. This assumes that the world will agree to being broken up in such a way. Anyone who has tried to create a well-designed inheritance hierarchy will be familiar with how this falls apart.

Let’s say we have a Ball class, representing, well, a ball. We then define a BouncingBall, and a RollingBall, both inheriting from the base Ball class, each having suitable extensions of properties and methods. What happens when we need a ball that can both bounce and roll?

Admittedly, inheritance is an easy target for criticism, and the solution to this problem is well-understood. Behaviours (bouncing and rolling) are not essential things, and should be composed instead. That this is known does not prevent a great deal of inheritance making its way into production systems. So the problem remains.
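As a minimal sketch of what composing behaviours can look like in JavaScript, consider the following. The names `makeBall`, `canBounce` and `canRoll` are invented for illustration; this is one of several ways to compose behaviours, not a prescribed technique.

```javascript
// Hypothetical example: behaviours are plain functions that add a
// capability to whatever object they are given.
function canBounce(ball) {
  ball.bounce = function () {
    return ball.name + ' bounces';
  };
  return ball;
}

function canRoll(ball) {
  ball.roll = function () {
    return ball.name + ' rolls';
  };
  return ball;
}

// The base "thing" carries only what is essential: here, a name.
function makeBall(name) {
  return { name: name };
}

// A ball that both bounces and rolls is a composition of behaviours,
// with no BouncingRollingBall class required.
const football = canRoll(canBounce(makeBall('football')));

console.log(football.bounce()); // football bounces
console.log(football.roll());   // football rolls
```

Each behaviour can be applied on its own, or combined with others, without deciding on a class hierarchy up front.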

Objects are really much worse than you think. They are derived from a naïve mathematical view of the world. The idea that there are sets of pure, platonic things, all of which share the same properties and characteristics. On the surface this seems reasonable. Scratch the surface and you find that this idea breaks down in the face of the real world. The real world is messy. It even breaks down in the mathematical world. Does the set of all sets that do not contain themselves contain itself? You tell me.

The ultimate weakness of objects is that they are simply enablers for more monolithic development. Think about it. Objects are grab bags of everything a system needs. You have properties, private and public. You have methods, perhaps overridden above or below. You have state. There are countless systems suffering from the Big Ball of Mud anti-pattern, where a few enormous classes contain most of the tangled logic. There are just too many different kinds of thing that can go into an object.

But objects have one last line of defense. Design patterns! Let’s take a closer look at what design patterns can do for us.

Bad Patterns are Beautiful

In 1783 Count Hans Axel von Fersen commissioned a pocket watch for the then Queen of France, Marie Antoinette. The count was known to have had a rather close relationship with the Queen, and the extravagance of the pocket watch suggests it was very close indeed. The watch was to contain every possible chronometric feature of the day: a stopwatch, an audible chime, a power meter, and a thermometer, among others. The master watchmaker, Abraham-Louis Breguet, was tasked with the project. Neither Marie Antoinette, Count Fersen, nor Breguet himself lived to see the watch completed. It was finally finished in 1827, by Breguet’s son. It is one of the most beautiful projects to have been delivered late and over-budget.

It is not for nothing that additional features beyond basic time keeping are known as complications in the jargon of the watchmaker. The watches themselves possess a strange property. The more complex they are, the more intricate, the more monolithic, the more beautiful they are considered. But they are not baroque without purpose. Form must follow function. The complexity is necessary, given their mechanical nature.

We accept this because the watches are singular pieces of artistry. You would find yourself under the guillotine along with Marie Antoinette in short order if you tried to justify contemporary software projects as singular pieces of artistry. And yet, as software developers, we revel in the intricacies we can build. The depth of patterns that we can apply. The architectures we can compose.

The complexity of the Marie Antoinette is seductive. It is self-justifying. Our overly complex software is seductive in the same way. What journeyman programmer has not launched headlong into a grand architecture, obsessed by the aesthetic of their newly imagined design? The intricacy is compounded by the failings of their chosen language and platform.

If you have built systems using one of the major object-oriented languages, you will have experienced this. To build a system of any significant size, you must roll out your Gang-of-Four design patterns. We are all so grateful for this bible that we have forgotten to ask a basic question. Why are design patterns needed at all? Why do you need to know 100+ patterns to use objects safely? This is a code smell!

Just because the patterns work, does not mean they are good. We are treating the symptoms, not the disease. There is truth in the old joke that all programming languages end up being incomplete, buggy versions of LISP. That’s pretty much what design patterns are doing for you. This is not an endorsement of functional programming either, or any language structure. They all have similar failings. I’m just having a go at the object-oriented languages because it’s easy!

Just because you can use design patterns in the right way does not mean using design patterns is the right thing to do. There is something fundamentally wrong with languages that need design patterns, and I think I know what it is.

But before we get into that, let’s take a look at a few things that have the right smell. Let’s take a look at the Node.js module system.

Node.js Modules are Sweet

If you’ve built systems in Java or .Net, you’ll have run into the dreaded problem of dependency hell. You’re trying to use component A, which depends on version 1 of component C. But you’re also trying to use component B, which depends on version 2 of component C. You end up stuck in a catch-22, and all of the solutions are a kludge. Other platforms, like Ruby or Google’s new Go language may make it easier to find and install components, but they don’t solve this problem either.

As an accident of history, JavaScript has no built-in module system (at least, not yet). This weakness has turned out to be a hidden strength. Not only has it created the opportunity to experiment with different approaches to defining modules, but it also means that all JavaScript module systems must survive within the constraints of the language. Modules end up being local variables that do not pollute the global namespace. This means that module A can load version 1 of module C, and module B can load version 2 of module C, and everything still works.
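The following sketch shows this isolation using the standard Node.js `require` lookup through nested `node_modules` folders. It writes a throwaway project to a temporary directory; the module names `a`, `b` and `c` come from the description above, while the file layout and the `writeModule` helper are assumptions made for the example.

```javascript
const fs = require('fs');
const os = require('os');
const path = require('path');

// Build a throwaway project on disk (hypothetical layout):
//   a/index.js   a/node_modules/c/index.js   (version 1 of c)
//   b/index.js   b/node_modules/c/index.js   (version 2 of c)
const root = fs.mkdtempSync(path.join(os.tmpdir(), 'modules-demo-'));

function writeModule(dir, source) {
  fs.mkdirSync(dir, { recursive: true });
  fs.writeFileSync(path.join(dir, 'index.js'), source);
}

writeModule(path.join(root, 'a', 'node_modules', 'c'), 'module.exports = { version: 1 };');
writeModule(path.join(root, 'b', 'node_modules', 'c'), 'module.exports = { version: 2 };');

// Modules a and b contain identical code. Each asks for 'c' and gets
// the copy found in its own nearest node_modules folder.
const consumer = "var c = require('c'); module.exports = function () { return c.version; };";
writeModule(path.join(root, 'a'), consumer);
writeModule(path.join(root, 'b'), consumer);

const a = require(path.join(root, 'a'));
const b = require(path.join(root, 'b'));

console.log(a(), b()); // 1 2

// Both modules are already loaded, so the temporary files can be removed.
fs.rmSync(root, { recursive: true, force: true });
```

Inside each module, `c` is just a local variable, so the two versions never see each other.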

The Node Package Manager, npm, provides the infrastructure necessary to use modules in practice. As a result, Node.js projects suffer very little dependency hell. Further, it means that Node.js modules can afford to be small, and have many dependencies. You end up with a large number of small focused modules, rather than a limited set of popular modules. In other platforms, this limited set of popular modules ends up being monolithic because they need to be self-sufficient and do as much as possible. Having dependencies would be death.

Modules also naturally tend to have a much lower public API to code ratio. They are far more encapsulated than objects. You can’t as a rule misuse them in the same way objects can be misused. The only real way to extend modules is to compose them into new modules, and that’s a good thing.

The Node.js module system, as implemented by npm, is the closest anybody has come in a long time to a safe mechanism for software re-use. At least half the value of the entire Node.js platform lies in npm. You can see this from the exponential growth rate of the number of modules, and the number of downloads.

Node.js Patterns are Simple

If you count the number of spirals in the seed pattern at the centre of a sunflower, you’ll always end up with a Fibonacci number. This is a famous mathematical number sequence, where the next Fibonacci number is equal to the sum of the previous two. You start with 0 and 1, and it continues 1, 2, 3, 5, 8, 13, 21, 34, and so on. The sequence grows quickly, and calculating later Fibonacci numbers is CPU intensive due to their size.

There’s a famous blog post attacking Node.js for being a terrible idea. An example is given of a recursive algorithm to calculate Fibonacci numbers. As this is particularly CPU intensive, and as Node.js only has one thread, the performance of this Fibonacci service is terrible. Many rebuttals and proposed solutions later, it is still the case that Node.js is single-threaded, and CPU intensive work will still kill your server.

If you come from a language that supports threads, this seems like a killer blow. How can you possibly build real systems? There are two things that you do. You delegate concurrency to the operating system, using processes instead of threads. And you avoid CPU intensive tasks in code that needs to respond quickly. Put that work on a queue and handle it asynchronously. This turns out to be more than sufficient in practice.
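Here is a rough sketch of the first idea: handing CPU intensive work to a separate operating system process so that the main event loop stays responsive. It uses the core `child_process.execFile` function to start a second Node.js process. The helper name `fibInChildProcess` and the inline script are assumptions for illustration; a production system would more likely use a pool of worker processes or a message queue.

```javascript
const { execFile } = require('child_process');

// The CPU-heavy work, as a tiny standalone script: naive recursive
// Fibonacci. It reads n from the last command-line argument.
const script =
  'function fib(n) { return n < 2 ? n : fib(n - 1) + fib(n - 2); }' +
  'console.log(fib(Number(process.argv[process.argv.length - 1])));';

// Hypothetical helper: run the script in a separate Node.js process and
// report the result through an error-first callback.
function fibInChildProcess(n, callback) {
  execFile(process.execPath, ['-e', script, String(n)], function (err, stdout) {
    if (err) return callback(err);
    callback(null, Number(stdout));
  });
}

fibInChildProcess(20, function (err, result) {
  if (err) throw err;
  console.log('fib(20) =', result); // fib(20) = 6765
});

// This line runs immediately; the parent process is not blocked.
console.log('event loop is free while the child process works');
```

The operating system schedules the child process on whatever core is available, which is the sense in which concurrency is delegated rather than managed in your own code.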

Threads are notoriously difficult things to get right. Node.js wins by avoiding them altogether. Your code becomes much easier to reason about.

This is the rule for many things in Node, when compared to object-oriented languages. There is simply no need for a great many of the patterns and architectures. There was a discussion recently on the Node.js mailing list about how to implement the singleton pattern in JavaScript. While you can do this in JavaScript using prototypal inheritance, there’s really very little need in practice, because modules tend to look after these things internally. In fact, the best way to achieve the same thing using Node.js is to implement a standalone service that other parts of your system communicate with over the network.

Node.js does require you to learn some new patterns, but they are few in number, and have broad application. The most iconic is the callback pattern, where you provide a function that will be called when the system has more data for you to work with. The signature of this function is always: an error object first, if there was an error. Otherwise the first argument is null. The second argument is always the result data.
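A small sketch of that signature in use follows. The `divide` function is a made-up example, and `process.nextTick` stands in for whatever real asynchronous work (a file read, a network call) would normally happen before the callback fires.

```javascript
// Hypothetical asynchronous function following the callback convention:
// callback(err) on failure, callback(null, result) on success.
function divide(a, b, callback) {
  process.nextTick(function () {
    if (b === 0) return callback(new Error('division by zero'));
    callback(null, a / b);
  });
}

// Callers always check the first argument before touching the result.
divide(10, 2, function (err, result) {
  if (err) return console.error('failed:', err.message);
  console.log('result:', result); // result: 5
});

divide(1, 0, function (err, result) {
  if (err) return console.error('failed:', err.message); // failed: division by zero
  console.log('result:', result);
});
```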

The callback function arises naturally from the event handling loop that Node.js uses to dispatch data as it comes in and out of the system. JavaScript, the language, designed for handling user interface events in the browser, turns out to be well-suited to handling data events on the server-side as a result.

The first thing you end up doing with Node.js when you start to use it is to create callback spaghetti. You end up with massively indented code, with callback within callback. After some practice you quickly learn to structure your code using well-named functions, chaining, and libraries like the async module. In practice, callbacks, while they take some getting used to, do not cause any real problems.

What you do get is a common interface structure for almost all module APIs. This is in stark contrast to the number of different ways you can interact with object-oriented APIs. The learning surface is greatly reduced.

The other great pattern in Node.js is streams. These are baked into the core APIs, and they let you manipulate and transform data easily and succinctly. Data flows are such a common requirement that you will find the stream concept used all over the place. As with callbacks, the basic structure is very simple. You pipe data from one stream to another. You compose data manipulations by building up sets of streams connected by pipes. You can even have duplex streams that can read and write data in both directions. This abstraction leads to very clean code.
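As an illustration, here is a minimal sketch that pipes text through a transform and into a collector, using the core `stream` module. The `upperCase` and `collect` helpers are invented for the example; `Readable.from` requires a reasonably recent version of Node.js.

```javascript
const { Readable, Transform, Writable } = require('stream');

// A transform stream that upper-cases each chunk passing through it.
function upperCase() {
  return new Transform({
    transform(chunk, encoding, callback) {
      callback(null, chunk.toString().toUpperCase());
    }
  });
}

// A writable stream that collects everything it receives into an array.
function collect(chunks) {
  return new Writable({
    write(chunk, encoding, callback) {
      chunks.push(chunk.toString());
      callback();
    }
  });
}

const received = [];

// Compose the data flow by connecting streams with pipes.
Readable.from(['small ', 'focused ', 'streams'])
  .pipe(upperCase())
  .pipe(collect(received))
  .on('finish', function () {
    console.log(received.join('')); // SMALL FOCUSED STREAMS
  });
```

Each stage knows nothing about its neighbours, so stages can be swapped or reordered without touching the others. Note that `pipe` does not forward errors between stages; the core `stream.pipeline` function is an alternative when that matters.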

Because JavaScript is a semi-functional language, and because it does not provide all the trappings of traditional object-oriented code, you end up with a much smaller set of core patterns. Once you learn them, you can read and understand most of the code you see. It is not uncommon in Node.js projects to review the code of third party modules to gain a greater understanding of how they work. The effort you need to expend to do this is substantially less than for other platforms.

Thinking at the Right Level

Our programming languages should let us think at the right level, the level of the problems we are trying to solve. Most languages fail miserably at this. To use an analogy, we’d like to think in terms of beer, but we end up thinking in terms of the grains that were used to brew the beer.

Our abstractions are at too low a level, or end up being inappropriate. Our languages do not enable us to easily compose their low level elements into things at the right level. The complexity in doing so trips us up, and we end up with broken, leaky abstractions.

Most of the time, when we build things with software, we are trying to model use cases. We are trying to model things that happen in the world. The underlying entities are less important. There is an important data point in the observation that beginning programmers write naïve procedural code, and only later learn to create appropriate data structures. This is telling us something about the human mind. We are able to get things done by using our intelligence to accommodate differences in the entities that make up our world.

A bean-bag chair is still a chair. Every human knows how to sit in one. It has no back, and no legs, but you can still perform the use-case: sitting. If you’ve modeled a chair as an object with well-defined properties, such as assuming it has legs, you fail in cases like these.

We know that the code to send an email should not be tightly coupled to the API of the particular email sending service we are using. And yet if you create an abstract email sending API layer, it inevitably breaks when you change the implementation because you can’t anticipate all the variants needed. It’s much better to be able to say, “send this email, here’s everything I’ve got, you figure it out!”

To build large-scale systems you need to represent this action-oriented way of looking at the world. This is why design patterns fail. They are all about static representations of perfect ontologies. The world does not work like that. Our brains do not work like that.

How does this play out in practice? What are the new “design patterns”? In our client projects, we use two main tools: micro-services, and pattern matching.

Micro-Services Scale

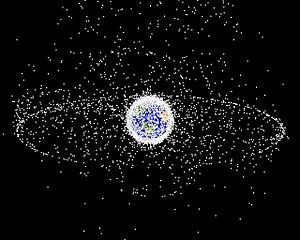

We can use biological cells as an inspiration for building robust scalable systems. Biological cells have a number of interesting properties. They are small and single-purpose. There are many of them. They communicate using messages. Death is expected and natural.

Let’s apply this to our software systems. Instead of building a monolithic 100 000 line codebase, build 100 small services, each 100 lines long. Fred George (the inventor of programmer anarchy), one of the biggest proponents of this approach, calls these small programs micro-services.

The micro-services approach is a radically different way of building systems. The services each perform a very limited task. This has the nice effect that they are easy to verify. You can eye-ball them. Testing is much less important.

On the human side, it also means that the code is easy to rewrite, even in a different language, because the services can be written in pretty much any language. If a junior engineer writes a bad implementation, you can throw it away. If you don’t understand the code, you throw it away and rewrite it. Micro-services are easy to replace.

Micro-services communicate with each other by sending messages. You can send these messages directly over internal HTTP, or use a message queue for more scale. In fact, the transport mechanism does not matter all that much. From the perspective of the service, it just deals with whatever messages come its way. When you’re building services in Node.js, JSON is the most natural formatting choice. It works well for other languages too.

Micro-services are easy to scale. They offer a much finer grained level of scaling than simply adding more servers running a single system. You just scale the parts you need. We’ve not found the management of all these processes to be too onerous either. In general you can use monitoring utilities to ensure that the correct number of services stay running.

Death becomes relatively trivial. You’re going to have more than one instance of important services running, and restarts are quick. If something strange happens, just die and restart. In fact, you can make your system incredibly robust if you build preprogrammed death into the services, so that they die and restart randomly over time. This prevents the build up of all sorts of corruption. Micro-services let you behave very badly. Deployments to live systems are easy. Just start replacing a few services to see what happens. Rolling back is trivial – relaunch the old versions.

Micro-services also let you scale humans, both at the individual and team level. Individual brains find micro-services much easier to work with, because the scope of consideration is so small, and there are few side-effects. You can concentrate on the use-case in hand.

Teams also scale. It’s much easier to break up the work into services, and know that there will be few dependencies and blockages between team members. This is really quite liberating when you experience it. No process required. It flows naturally out of the architecture.

Finally, micro-services let you map your use-cases to independent units of software. They allow you to think in terms of what should happen. This lets you get beyond the conceptual changes that objects impose.

Pattern Matching Rules

Micro-services can bring you a long way, but we’ve found that you need a way to compose them so that they can be reused and customised. We use pattern matching to do this.

This is once more about trying to think at the right level. The messages that flow between services need to find their way to the right service, in the right form, with the right preparation.

The pattern matching does not need to be complex. In fact, the simpler the better. This is all about making systems workable for human minds. We simply test the values of the properties in the message, and if you can match more properties than some other service, you win.

This simple approach makes it very easy to customise behaviour. If you’ve ever had to implement sales tax rules, you’ll know how tricky they can be. You need to take into account the country, perhaps the state, the type of good, the local bylaws. Patterns make this really easy. Start with the general case, and add any special cases as you need them. The messages may or may not contain all the properties. It’s not a problem, because special properties are only relevant for special cases anyway.
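To make the idea concrete, here is a minimal sketch of a pattern router in which the handler matching the most properties wins, applied to the sales tax case. It is a hand-rolled illustration, not the API of any particular toolkit, and the `createRouter` name, message properties and tax rates are all made up for the example.

```javascript
// Hypothetical pattern router: a pattern is a plain object, and it matches
// a message when every property in the pattern equals the message's value.
function createRouter() {
  const routes = [];

  function matches(pattern, message) {
    return Object.keys(pattern).every(function (key) {
      return message[key] === pattern[key];
    });
  }

  function add(pattern, handler) {
    routes.push({ pattern: pattern, handler: handler, size: Object.keys(pattern).length });
  }

  // Among all matching patterns, the one with the most properties wins.
  function find(message) {
    let best = null;
    for (const route of routes) {
      if (matches(route.pattern, message) && (!best || route.size > best.size)) {
        best = route;
      }
    }
    return best ? best.handler : null;
  }

  function act(message) {
    const handler = find(message);
    if (!handler) throw new Error('no pattern matches this message');
    return handler(message);
  }

  return { add: add, find: find, act: act };
}

const tax = createRouter();

// Start with the general case, then add special cases (illustrative rates).
tax.add({ cmd: 'salestax' }, (msg) => (msg.net * 20) / 100);
tax.add({ cmd: 'salestax', country: 'IE' }, (msg) => (msg.net * 23) / 100);
tax.add({ cmd: 'salestax', country: 'IE', type: 'books' }, () => 0);

console.log(tax.act({ cmd: 'salestax', net: 100 }));                               // 20
console.log(tax.act({ cmd: 'salestax', net: 100, country: 'IE' }));                // 23
console.log(tax.act({ cmd: 'salestax', net: 100, country: 'IE', type: 'books' })); // 0
console.log(tax.act({ cmd: 'salestax', net: 100, country: 'US' }));                // 20
```

The last call shows why missing or unknown properties are harmless: a message with no special case simply falls through to the general pattern.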

Cross-cutting concerns are also easy to support with pattern matching. For example, to log all the messages related to saving data, simply grab those as they appear, make the log entry, and then send the message on its way. You can add permissions, caching, multiple databases. All without affecting the underlying services. Of course, some work is needed to layer up the pattern matching the way you need it, but this is straightforward in practice.

The greatest benefit that we have seen is the ability to compose and customise services. Software components are only reusable to the extent that they can be reused. Pattern matching lets you do this in a very decoupled way. Since all you care about is transforming the message in some way, you won’t break lower services so long as your transformations are additive.

A good example here is user registration. You might have a basic registration service that saves the user to a database. But then you’ll want to do things like send out a welcome email, configure their settings, verify their credit card, or any number of project-specific pieces of business logic. You don’t extend user registration by inheriting from a base class. You extend by watching out for user registration messages. There is very little scope for breakage.
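The sketch below shows one way this kind of extension might look, using a deliberately tiny, hypothetical message bus rather than any real toolkit. A second handler registered for the same pattern receives the earlier handler as `prior`, lets it do its work, and then adds to the result. Everything here is synchronous and in-memory to keep the example short; real services would be asynchronous and would talk to a real database and email service.

```javascript
// Hypothetical mini message bus. Registering a handler for a pattern that
// already has one wraps the earlier handler, which is passed in as `prior`.
function createBus() {
  const handlers = new Map();
  const keyOf = (msg) => msg.role + ':' + msg.cmd;

  return {
    add(pattern, handler) {
      const key = keyOf(pattern);
      const prior = handlers.get(key);
      handlers.set(key, (msg) => handler(msg, prior));
    },
    act(msg) {
      const handler = handlers.get(keyOf(msg));
      if (!handler) throw new Error('no service handles ' + keyOf(msg));
      return handler(msg);
    }
  };
}

const bus = createBus();
const users = [];  // stand-in for a database
const outbox = []; // stand-in for an email service

// The basic registration service: save the user.
bus.add({ role: 'user', cmd: 'register' }, function (msg) {
  users.push({ email: msg.email });
  return { ok: true, email: msg.email };
});

// A project-specific extension: watch for the same message, let the basic
// service run, then send a welcome email. The change is purely additive.
bus.add({ role: 'user', cmd: 'register' }, function (msg, prior) {
  const result = prior(msg);
  outbox.push('Welcome, ' + msg.email);
  return Object.assign({}, result, { welcomed: true });
});

console.log(bus.act({ role: 'user', cmd: 'register', email: 'ada@example.com' }));
// { ok: true, email: 'ada@example.com', welcomed: true }
```

The basic registration service is never edited, and it has no knowledge of the welcome email.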

Obviously, while these two strategies, micro-services and pattern matching, can be implemented and used directly, it’s much easier to do so in the context of a toolkit. We have, of course, written one for Node.js, called Seneca.

Galileo’s Moons

We’re building our business on the belief that the language tools that we have used to build large systems in the past are insufficient. They do not deliver. They are troublesome and unproductive.

This is not surprising. Many programming languages, and object-oriented ones in particular, are motivated by ideas of mathematical purity. They have rough edges and conceptual black holes, because they were easier to implement that way. JavaScript is to an extent guilty of all this too. But it is a small language, and it does give us the freedom to work around these mistakes. We’re not in the business of inventing new programming languages, so JavaScript will have to do the job. We are in the business of doing things better. Node.js and JavaScript help us do that, because they make it easy to work with micro-services, and pattern matching, our preferred approach to large-scale systems development.

In 1610, the great Italian astronomer, Galileo Galilei, published a small pamphlet describing the discoveries he had made with his new telescope. This document, Sidereus Nuncius (the Starry Messenger), changed our view of the world.

Galileo had observed that four stars near the planet Jupiter behaved in a very strange way. They seemed to move in a straight line backwards and forwards across the planet. The only reasonable explanation was that there were moons orbiting Jupiter, and Galileo was observing them side-on. This simple realisation showed that some celestial bodies did not orbit the earth, and ultimately destroyed the Ptolemaic theory, that the sun orbited the earth.

We need to change the way we think about programming. We need to start from the facts of our own mental abilities. The thought patterns we are good at. If we align our programming languages with our abilities, we can build much larger systems. We can keep one step ahead of technical debt, and we can make programming fun again.